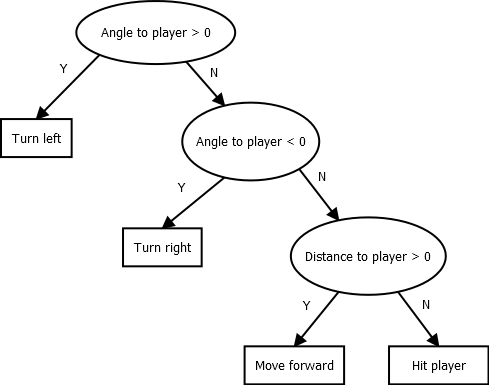

class: center, middle # Artificial Intelligence ## Intelligent Agents ### I 2020 --- class: center, middle # Intelligent Agents --- # Agents What is an agent? --- # Agents * An *agent* is an entity that **perceives** its **environment** and **acts** on it * Some/Many people frown upon saying that something is "an AI" and prefer the term "agent" * Agents come in many different forms - Robots - Software - One could view humans or other living entities as agents --- class: medium # PEAS * **P**erformance: How do we measure the quality of the agent (e.g. score, player enjoyments) * **E**nvironment: What surroundings is the agent located in (a game, house for a vacuum robot, ...) * **A**ctuators: Which actions can the agent perform (e.g. move, remove dirt, ...) * **S**ensors: How does the agent perceive the world (game data, screen shots, cameras, text input, ...) --- class: medium # PEAS What's the PEAS for a self-driving car? * **P**erformance? * **E**nvironment? * **A**ctuators? * **S**ensors? --- # Rational Agents "For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has." - *AI: A Modern Approach*, Russel and Norvig --- # Performance Measure * We want to tell the agent what it should "achieve" * Generally, we want to avoid thinking about "how" the agent will achieve this goal * However, there are many examples where an agent might "exploit" an underspecified goal --- # Exploiting Coast Runners <iframe width="560" height="315" src="https://www.youtube.com/embed/tlOIHko8ySg" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe> --- # Agent Knowledge "... a rational agent should select an action that is **expected** to maximize its performance measure ..." * An agent may choose the "wrong" action * For example, in Poker, an agent may choose to fold when they would have won * But as long as the **expectation** (in this case: expected value, using probabilities) was correct, the agent behaved rationally * Rationality does **not** require omniscience --- # The Structure of Agents * Each agent has an *architecture* and a *program* * The architecture defines the sensors and actuators (inputs and outputs) for our agent * The program is the behavior, the intelligence, of the agent --- class: mmedium # Agents in Games * Say you have some NPC character in your game that should be controlled by AI * Your game typically contains some main loop that updates all game objects and renders them * At some points you run an AI update * This means, all our agents receive one "update" call every x ms, and this update call has to make the necessary decisions * Simplest approach: On each update, the agent reads the sensor values and calculates which actuators to use based on these values --- # Braitenberg Vehicles * Valentino Braitenberg proposed a thought experiment with simple two-wheel vehicles * The vehicles had two light sensors, and there was a light in the room * Each of the two sensors would be connected to one of the wheels * Depending on how this was done, the vehicle would seek or flee from the light * The behavior of the agent is fully reactive, with no memory --- # Braitenberg Vehicles <img src="/PF-3341/assets/img/braitenberg.png" width="70%"/> --- # A first agent: An enemy in an ARPG * Performance: How much damage it can do to the player? * Environment: A dungeon in the game * Actuators: Rotate, move forward, hit * Sensors: Player position (With that we can compute distance and angle to the player) --- # Behavior for our ARPG agent * If the angle to the player is greater than 0, turn left * (else) If the angle to the player is less than 0, turn right * (else) If the distance to the player is greater than 0, move forward * (else) Hit the player --- # Limitations * This is, of course, a very simple agent * Imagine if there were walls * What if we want the enemy to have different modes of engagement, flee when it is in danger, etc.? * How did we even come up with these conditions? * How could we make this a bit friendlier to edit? --- # Decision Trees  --- # Decision Trees: Limitations * We haven't actually changed anything from the if statements (other than drawing them) * Designing a decision tree is still a lot of manual work * There's also no persistence, the agent will decide a new behavior every time the tree is evaluated * There is one nice thing: Decision trees can (sometimes) be learned with Machine Learning techniques --- class: center, middle # Finite State Machines --- # States? * Say we want our enemy to attack more aggressively if they have a lot of health and try to flee when they become wounded * In other words: The enemy has a *state* that determines what they do, in addition to their inputs and outputs * But we'll need new sensors: The enemy needs to know their own health level * Let's also give them a ranged weapon --- # Finite State Machines * *States* represent what the agent is currently supposed to do * Each state is associated with *actions* the agent should perform in that state * *Transitions* between the states observe the sensors and change the state when a condition is met * The agent starts in some designated state, and can only be in one state at a time --- # Finite State Machines <img src="/PF-3341/assets/img/fsm.png" width="100%"/> --- class: mmedium # Finite State Machines: Limitations * There's no real concept of "time", it has to be "added" * If you just want to add one state you have to determine how it relates to every other state * If you have two Finite State Machines they are hard to compose * It's also kind of hard to reuse subparts * For example: The parts of our state machine that is used to engage an enemy at range could be useful for an archer guard on a wall, but how do we take *just* those parts? --- # Hierarchical Finite State Machines * Finite State Machines define the behavior of the agent * But we said the nodes are behaviors?! * We can make each node another sub-machine! * This leads to *some* reusability, and eases authoring --- class: center, middle # Behavior Trees --- class: small # Behavior Trees * Let's still use a graph, but make it a tree! * If we have a subtree, we now only need to worry about one connection: its parent * The *leafs* of the tree will be the actual actions, while the interior nodes define the decisions * Each node can either be successful or not, which is what the interior nodes use for the decisions * We can have different kinds of nodes for different kinds of decisions * This is extensible (new kinds of nodes), easily configurable (just attach different nodes together to make tree) and reusable (subtrees can be used multiple times) --- class: small # Behavior Trees * Every AI time step the root node of the tree is executed * Each node saves its state: - Which child is currently executing for interior nodes - Which state the execution is in for leaf nodes * When a node is executed, it executes its currently executing child * When a leaf node is executed and finishes, it returns success or failure to its parent * The parent then makes a decision based on this result --- # Behavior Trees: Common Node types * Choice/Selector: Execute children in order until one succeeds * Sequence: Execute children in order until one fails * Loop: Keep executing child (or children) until one fails * Random choice: Execute one of the children at random * etc. --- # Behavior Trees: How do you make an "if" statement? * Some actions are just "checks", they return success iff the check passes * A *sequence* consisting of a check and another node will only execute the second node if the check passes * If we put multiple such sequences as children of a choice, the first sequence with a passing condition will be executed --- # Behavior Trees <img src="/PF-3341/assets/img/bt.png" width="100%"/> --- class: center, middle # Let's make a Behavior Tree for our ARPG enemy! --- # Behavior Trees * Behavior Trees are a very powerful technique and widely used in games * Halo 2, for example, used them * Unreal Engine has built-in support for Behavior Trees (there are plugins for Unity) * The tree structure usually allows for visual editing (which Unreal Engine also has built-in) --- # Behavior Trees in Unreal Engine <img src="/PF-3341/assets/img/bteditor.jpg" width="70%"/> --- class: center, middle # Environments --- class: small # Environments * Observability * Single-agent/multi-agent * Deterministic or stochastic * Episodic or sequential * Static or dynamic * Discrete or continuous * Known or unknown --- # Observability * In some environments, the agent has full knowledge * Often, though, they can not "see" everything * For example: In Poker the opponent's cards are hidden --- class: medium # Single vs. Multi-Agent * Single-Agent: The agent does not have to "worry" about what other agents are doing * Multi-Agent: There are other entities in the world that pursue goals * For example, "physics" is not really an agent, it has no goal * In games we often have opponents, and need to perform actions that take into account what the opponent is likely going to do --- # Deterministic or Stochastic * Deterministic: Each action always does the same thing, and nothing else changes the world * Stochastic: The state of the world may change by chance, or actions may have random effects * Note: Poker is **not** stochastic: The deck has a predetermined order (even if it is unknown to the agent) * Often, however, we abstract away unobservable information as stochasticity (self-driving cars) --- # Episodic vs. Sequential * Episodic: Each action is independent from other actions * Sequential: An action may have effects on future actions * Most games are sequential: If we perform an action, we change what we do in the future * Many tasks are episodic, though: Spam filtering, face recognition, path finding, etc. --- # Static vs. Dynamic * Static: The environment does not change if the agent does nothing * Dynamic: While the agent deliberates or acts, the environment may change * Note: This change may be due to "physics", or other agents * Semi-dynamic: The environment does not change, but the score does (chess clock) --- # Discrete vs. Continuous * Discrete: There is a finite/countable number of states and time moves in steps * Continuous: There is an uncountable number of states (like positions of a car in the real world), or time moves continuously * We can often discretize continuous variables * Many of our AI algorithms' complexity depends on the number of possible states --- # Known vs. Unknown * Known: The agent knows how the environment "works" (the "rules") * Unknown: The agent has to determine what each action does * Technically this isn't a property of the environment itself, but of the agent * For example, we can build an agent that knows the rules of Poker, or we could have one that learns them by trial and error --- # Environments <img src="/CI-0129/assets/img/environments.png" width="70%"/> --- # Environments * Each of these different properties of environments poses different challenges for the agent * In this class, we will discuss several different algorithms, each of which addresses some of these challenges * The first environments we look at will be observable, single-agent, deterministic, episodic, static, discrete and known --- # Next Time * Reminder: Find your groups and work on some project ideas * Be prepared to present online * Exact modality will be communicated --- # References * [Finite State Machines for Game AI (ES)](https://gamedevelopment.tutsplus.com/es/tutorials/finite-state-machines-theory-and-implementation--gamedev-11867) * [Halo 2 AI using Behavior Trees](http://www.gamasutra.com/view/feature/130663/gdc_2005_proceeding_handling_.php)