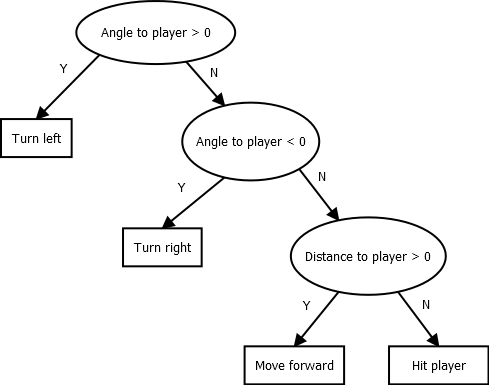

class: center, middle

# Artificial Intelligence

## Intelligent Agents

---

class: center, middle

# Introduction

---

class: center, middle

# What is Artificial Intelligence?

---

# What is AI

* No one really agrees on what "AI" is exactly
* As Douglas R. Hofstadter put it (citing Larry Tesler): "AI is whatever hasn't been done yet"
* Pathfinding "was" once AI
* Many people also confuse/conflate AI and Machine Learning

---

# Artificial Intelligence

<img src="/CI-0129/assets/img/ai.png" width="80%"/>

.footnote[Source: *AI: A Modern Approach*, Russell and Norvig]

---

# Act Humanly: The Turing Test

* Imagine sitting in front of a computer, chatting with someone
* You can ask questions, and the other "person" answers
* After the conversation, you are asked: Were you talking to a person or a computer?
* If you were actually talking to a computer but think it was a person, the computer "passed the Turing Test"

---

# The Turing Test

Why is the Turing Test hard?

--

* Natural Language Understanding
* Knowledge Representation: Remembering what you say/what it knows
* Reasoning: Deducing new knowledge from the information you provide
* Learning?
---

# Think Humanly

* Observe/test the human thought process for various problems
* Come up with algorithms/methods that mirror that process
* Apply them to new problems of the same type
* Result: "Intelligence"

---

class: medium

# Think Rationally

* *Logic* is a set of languages to model relationships between facts
* There are usually rules that determine how new information can be deduced
* A computer program can use these rules to **prove** facts about the world
* Logic is widely used in AI, because it can do/represent "anything" (in theory)
* In practice, there are two main limitations: computational resources and representational range

---

# Act Rationally

* Rather than imitating a human, we are often just interested in some particular task
* Our goal is to develop a program/robot to perform that task: a (rational) *agent*
* The agent actually *does* something: it *acts*
* Our goal is for the agent to perform the "best possible" action

---

# Best Possible Action?

<img src="/CI-0129/assets/img/trolleyproblem.png" width="100%"/>

---

# The Moral Machine

<img src="/CI-0129/assets/img/moralmachine.png" width="80%"/>

---

class: center, middle

# History and Extent of AI

---

class: medium

# History of AI

* Starting in 1943, researchers took inspiration from the human brain, mixed it with Logic and some theory of computation, and created the first "Neural Networks" (McCulloch and Pitts (1943), Hebb (1949))
* Marvin Minsky (1950) built the first Neural Network computer, and also wrote about the limitations of this approach
* Turing (1950) proposed the Turing Test as a "goal" for AI research
* A workshop at Dartmouth in 1956 established the term "Artificial Intelligence"

---

# History of AI

* One of the first AI agents was developed by Arthur Samuel in 1952 to play Checkers
* Gelernter (1959) created a Geometry Theorem Prover
* Newell and Simon developed a General Problem Solver (1950s-1960s)
* John McCarthy developed LISP (1958)

---

# History of AI

Herbert Simon in 1957:

"It is not my aim to surprise or shock you—but the simplest way I can summarize is to say that there are now in the world machines that think, that learn and that create. Moreover, their ability to do these things is going to increase rapidly until—in a visible future—the range of problems they can handle will be coextensive with the range to which the human mind has been applied."

---

class: medium

# History of AI

* Early AI research made rapid progress (from "nothing" to "playing Checkers really well")
* Machine translation was an early stumbling block: "there has been no machine translation of general scientific text, and none is in immediate prospect." (ALPAC report, 1966)
* Combinatorial explosion was a problem: Small problems worked, but larger problems became intractable (Lighthill, 1973)
* Machine Learning research funding was severely cut in the 1970s

---

class: medium

# AI Winter

* In the 1980s, the focus was more on combinatorial/logic-based AI
* It, too, suffered from grand promises it could not keep at the time
* LISP machines (specialized computers for LISP) were replaced by general-purpose computers
* As a result, in the late 1980s, general AI research funding was also severely reduced

---

class: medium

# The Boom

* Researchers developed smarter algorithms/heuristics to overcome the combinatorial explosion for some applications
* The goals shifted: Instead of building a "human-like" AI, research became (even) more task-focused
* With better computers, larger neural networks became possible
* In 1997, Deep Blue beat Garry Kasparov at Chess
* Computers advanced, and larger data sets became available

---

# Today

* Many developments of early AI are now taken for granted (pathfinding)
* Classical/logic-based AI has many applications
* Deep Learning is "everywhere"

---

# Why do we talk about this?
<img src="/CI-0129/assets/img/soberingmessage.png" width="100%"/>

.footnote[Source: Wired.com]

---

# Artificial Intelligence and Machine Learning

<img src="/CI-0129/assets/img/AIvsML.png" width="100%"/>

---

# Artificial Intelligence

.big[
"Everything in AI is either representation or search"
]

-Dave Roberts, NC State University

---

# Representations

* Logic is one representation
* Neural Networks are nothing more than non-linear functions (a representation of functions)
* Graphs, vectors, word counts, etc. are all different representations
* One challenge for AI is to convert a real-world task (e.g. translation) into a suitable **representation**

---

# Search

* Once we have represented our problem suitably, we want a solution
* In an AI problem, we do not know how to get the solution (that's the "intelligence" part)
* We therefore need to **search** through the space of possible solutions
* Note: *Optimization* is a kind of search: Find "the best" value

---

# Machine Learning

* What is Machine Learning?
* We have some data that we need to put in a suitable form (Representation)
* Then we optimize the parameters of some model to get the best possible fit for the data (Search)
* Note: There are many different representations, and many different search strategies!

---

class: center, middle

# Intelligent Agents

---

# Agents

What is an agent?

---

# Agents

* An *agent* is an entity that **perceives** its **environment** and **acts** on it
* Some/Many people frown upon saying that something is "an AI" and prefer the term "agent"
* Agents come in many different forms
  - Robots
  - Software
  - One could view humans or other living entities as agents

---

class: medium

# PEAS

* **P**erformance: How do we measure the quality of the agent (e.g. score, player enjoyment, cleanliness of the house, ...)
* **E**nvironment: What surroundings is the agent located in (a game, a house for a vacuum robot, ...)
* **A**ctuators: Which actions can the agent perform (e.g. move, remove dirt, ...)
* **S**ensors: How does the agent perceive the world (game data, screenshots, cameras, text input, ...)

---

class: medium

# PEAS

What's the PEAS for a self-driving car?

* **P**erformance?
* **E**nvironment?
* **A**ctuators?
* **S**ensors?

---

# Rational Agents

"For each possible percept sequence, a rational agent should select an action that is expected to maximize its performance measure, given the evidence provided by the percept sequence and whatever built-in knowledge the agent has."

- *AI: A Modern Approach*, Russell and Norvig

---

# Performance Measure

* We want to tell the agent what it should "achieve"
* Generally, we want to avoid thinking about "how" the agent will achieve this goal
* However, there are many examples where an agent might "exploit" an underspecified goal

---

# Exploiting Coast Runners

<iframe width="560" height="315" src="https://www.youtube.com/embed/tlOIHko8ySg" frameborder="0" allow="accelerometer; autoplay; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

---

# Agent Knowledge

"... a rational agent should select an action that is **expected** to maximize its performance measure ..."
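To make "expected" concrete, here is a minimal Python sketch of a single Poker decision, assuming made-up probabilities and chip payoffs that are purely illustrative. The names `expected_value`, `rational_choice`, and `poker_actions` are hypothetical; the agent simply picks the action with the highest probability-weighted payoff under its current beliefs:

```python
from typing import Dict, List, Tuple

# (probability, payoff in chips); all numbers below are hypothetical
Outcome = Tuple[float, float]


def expected_value(outcomes: List[Outcome]) -> float:
    """Probability-weighted sum of the payoffs of one action."""
    return sum(p * payoff for p, payoff in outcomes)


def rational_choice(actions: Dict[str, List[Outcome]]) -> str:
    """Return the action with the highest expected payoff, given the agent's beliefs."""
    return max(actions, key=lambda name: expected_value(actions[name]))


# Hypothetical beliefs: calling wins 20 chips with probability 0.3 and
# loses 10 chips otherwise; folding neither wins nor loses anything more.
poker_actions = {
    "call": [(0.3, 20.0), (0.7, -10.0)],
    "fold": [(1.0, 0.0)],
}

if __name__ == "__main__":
    print(f"{expected_value(poker_actions['call']):.2f}")  # -1.00
    print(rational_choice(poker_actions))  # fold
```

With these beliefs, calling is worth -1 chip on average, so folding is the rational choice, even in a hand where calling would have happened to win.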
* An agent may choose the "wrong" action
* For example, in Poker, an agent may choose to fold when they would have won
* But as long as the **expectation** (in this case: expected value, using probabilities) was correct, the agent behaved rationally
* Rationality does **not** require omniscience

---

# The Structure of Agents

* Each agent has an *architecture* and a *program*
* The architecture defines the sensors and actuators (inputs and outputs) for our agent
* The program is the behavior, the intelligence, of the agent

---

class: mmedium

# Agents in Games

* You are making a video game that has some NPC that should be controlled by AI
* Your game typically contains some main loop that updates all game objects and renders them
* At some point in this loop, you run an AI update
* This means all our agents receive one "update" call every x ms, and this update call has to make the necessary decisions
* Simplest approach: On each update, the agent reads the sensor values and calculates which actuators to use based on these values

---

# Braitenberg Vehicles

* Valentino Braitenberg proposed a thought experiment with simple two-wheeled vehicles
* The vehicles had two light sensors, and there was a light in the room
* Each of the two sensors would be connected to one of the wheels
* Depending on how this was done, the vehicle would seek or flee from the light
* The behavior of the agent is fully reactive, with no memory

---

# Braitenberg Vehicles

<img src="/PF-3341/assets/img/braitenberg.png" width="70%"/>

---

# A first agent: An enemy in an ARPG

* Performance: How much damage can it do to the player?
* Environment: A dungeon in the game
* Actuators: Rotate, move forward, hit
* Sensors: Player position (from which we can compute the distance and angle to the player)

---

# Behavior for our ARPG agent

* If the angle to the player is greater than 0, turn left
* (else) If the angle to the player is less than 0, turn right
* (else) If the distance to the player is greater than 0, move forward
* (else) Hit the player

---

# Limitations

* This is, of course, a very simple agent
* Imagine if there were walls
* What if we want the enemy to have different modes of engagement, flee when it is in danger, etc.?
* How did we even come up with these conditions?
* How could we make this a bit friendlier to edit?

---

# Decision Trees

---

# Decision Trees: Limitations

* We haven't actually changed anything from the if statements (other than drawing them)
* Designing a decision tree is still a lot of manual work
* There's also no persistence: the agent will decide on a new behavior every time the tree is evaluated
* There is one nice thing: Decision trees can (sometimes) be learned with Machine Learning techniques

---

class: center, middle

# Finite State Machines

---

# States?
* Say we want our enemy to attack more aggressively if they have a lot of health and try to flee when they become wounded
* In other words: The enemy has a *state* that determines what they do, in addition to their inputs and outputs
* But we'll need new sensors: The enemy needs to know their own health level
* Let's also give them a ranged weapon

---

# Finite State Machines

* *States* represent what the agent is currently supposed to do
* Each state is associated with *actions* the agent should perform in that state
* *Transitions* between the states observe the sensors and change the state when a condition is met
* The agent starts in some designated state, and can only be in one state at a time

---

# Finite State Machines

<img src="/PF-3341/assets/img/fsm.png" width="100%"/>

---

class: mmedium

# Finite State Machines: Limitations

* There's no real concept of "time"; it has to be "added"
* If you just want to add one state, you have to determine how it relates to every other state
* If you have two Finite State Machines, they are hard to compose
* It's also kind of hard to reuse subparts
* For example: The parts of our state machine that are used to engage an enemy at range could be useful for an archer guard on a wall, but how do we take *just* those parts?

---

# Hierarchical Finite State Machines

* Finite State Machines define the behavior of the agent
* But we said the nodes (states) are behaviors?!
* We can make each node another sub-machine!
* This leads to *some* reusability, and eases authoring

---

class: center, middle

# Behavior Trees

---

class: mmedium

# Behavior Trees

* Let's still use a graph, but make it a tree!
* If we have a subtree, we now only need to worry about one connection: its parent
* The *leaves* of the tree will be the actual actions, while the interior nodes define the decisions
* Each node can either succeed or fail, which is what the interior nodes use for their decisions
* We can have different kinds of nodes for different kinds of decisions
* This is extensible (new kinds of nodes), easily configurable (just attach different nodes together to make a tree), and reusable (subtrees can be used multiple times)

---

class: mmedium

# Behavior Trees

* Every AI time step, the root node of the tree is executed
* Each node saves its state:
  - Which child is currently executing, for interior nodes
  - Which state the execution is in, for leaf nodes
* When a node is executed, it executes its currently executing child
* When a leaf node is executed and finishes, it returns success or failure to its parent
* The parent then makes a decision based on this result

---

# Behavior Trees: Common Node types

* Choice/Selector: Execute children in order until one succeeds
* Sequence: Execute children in order until one fails
* Loop: Keep executing child (or children) until one fails
* Random choice: Execute one of the children at random
* etc.

---

# Behavior Trees: How do you make an "if" statement?
* Some actions are just "checks": they return success iff the check passes
* A *sequence* consisting of a check and another node will only execute the second node if the check passes
* If we put multiple such sequences as children of a choice, the first sequence with a passing condition will be executed

---

# Behavior Trees

<img src="/PF-3341/assets/img/bt.png" width="100%"/>

---

# Behavior Trees

* Behavior Trees are a very powerful technique and widely used in games
* Halo 2, for example, used them
* Unreal Engine has built-in support for Behavior Trees (there are plugins for Unity)
* The tree structure usually allows for visual editing (which Unreal Engine also has built-in)

---

# Behavior Trees in Unreal Engine

<img src="/PF-3341/assets/img/bteditor.jpg" width="70%"/>

---

# References

* [AI: A Modern Approach](http://aima.cs.berkeley.edu/)
* [The Moral Machine](http://moralmachine.mit.edu/)
* [A Sobering Message About the Future at AI's Biggest Party](https://www.wired.com/story/sobering-message-future-ai-party/)
* [Faulty Reward Functions in the Wild](https://openai.com/blog/faulty-reward-functions/)
* [Finite State Machines for Game AI (ES)](https://gamedevelopment.tutsplus.com/es/tutorials/finite-state-machines-theory-and-implementation--gamedev-11867)
* [Halo 2 AI using Behavior Trees](http://www.gamasutra.com/view/feature/130663/gdc_2005_proceeding_handling_.php)