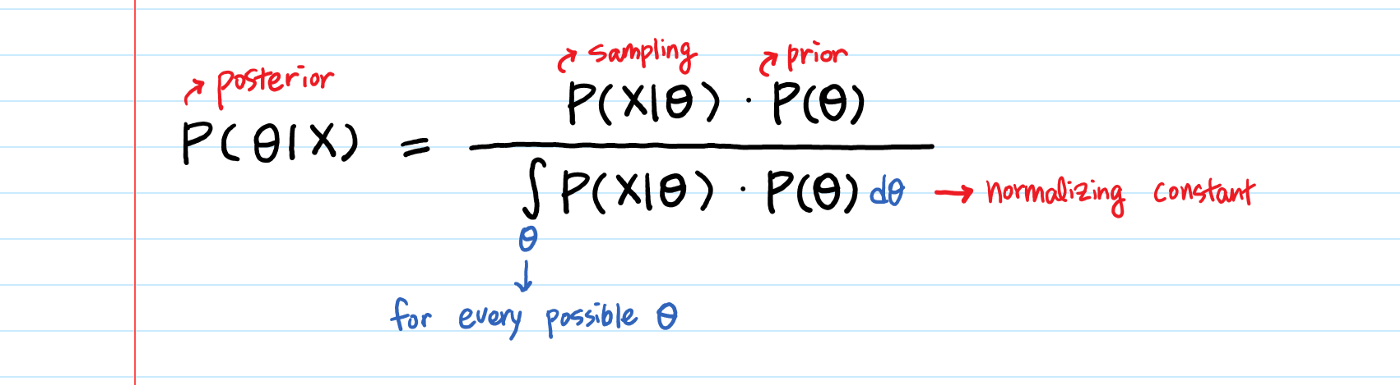

class: center, middle, inverse, title-slide # Bayesian Statistics in ML ## PCA as an extra --- # We are going to discuss today Part I * Bayesian Statistics - basics * Bayesian - why is it so complicated? * What kind of models are we talking about? * A simple example * Bayesian Statistics - DIY Part II * PCA - intuition * Different names, same technique * Formulas and math * PCA - DIY --- class: inverse, center, middle # Bayesian Statistics --- # Bayesian Statistics - basics **Probabilistic inference**: process of inferring unknown properties of a system given observations via the mechanics of probability theory. Example: suppose we want to understand some aspect of the population of the Costa Rica, such as the portion of adults who prefer Coca Cola over Pepsi. Let this unknown value be called `\(\theta\)`. How can I gain insight into this value? - **Opinions**: ask around people what they believe about the value of `\(\theta\)`. Note this opinion could be in the form of a range of possible values and/or a probability distribution. - **Data**: conduct a survey to gain some more information about `\(\theta\)`. So we contact some adults and ask them if they prefer Coca Cola over Pepsi, and record the results. Let’s call the results of this experiment `\(D\)` (for “data”). The goal of probabilistic inference is to make some statements about `\(\theta\)` given these observations. --- # Bayesian Statistics - basics Some probability rules: Sum Rule: `$$P(X) = \sum_{i=1}^{N} P(X, Y_i)$$` Product Rule: `$$P(X, Y) = P(Y | X) P(X)$$` Bayes Theorem: `$$P(Y|X) = \frac{P(X|Y) P(Y)}{\sum_{i=1}^{N} P(X|Y_i) P(Y_i)}$$` --- # Bayesian Statistics - basics  Source: https://towardsdatascience.com/bayesian-inference-intuition-and-example-148fd8fb95d6 For our example: begin with a prior belief about the value of `\(\theta\)`, represented by a prior probability distribution `\(P(\theta)\)`. To couple our observations `\(D\)` to the value of interest, we construct a probabilistic model `\(P(D|\theta)\)`, which describes how likely we would see a particular survey result `\(D\)` given a particular value of `\(\theta\)`. This posterior distribution encapsulates our entire belief about `\(\theta\)`! We can use it to answer various questions we might be about it. --- # Bayesian Statistics - basics There are four main steps to the Bayesian approach to probabilistic inference: - Likelihood. First, we construct the likelihood (or model), `\(P(D | \theta)\)`. This serves to describe the mechanism giving rise our observations `\(D\)` given a particular value of the parameter of interest `\(\theta\)`. - Prior. Next, we summarize our prior beliefs about the parameter `\(\theta\)`, which we encode via a probability distribution `\(P(\theta)\)`. - Posterior. Given some observations `\(D\)`, we obtain the posterior distribution `\(P(\theta | D)\)` using Bayes’ theorem. - Inference. We now use the posterior distribution to draw further conclusions as required. The last step is purposely open-ended. For example, we can use it to make predictions about new data (as in supervised learning), we can summarize it in various ways (for example, point estimation if we must report a single “best guess” of `\(\theta\)`), etc. --- # Bayesian Statistics - why is it complicated? Bayesian inference is a completely consistent system for probabilistic reasoning. Unfortunately, it is not without its issues, like: - Origin of priors - The meaning of probability (degrees of belief vs observed events). The two interpretations of probability agree on the axioms and theorems of probability theory. No one argues the truth of Bayes’ theorem. The main difference is that a frequentist would not allow a probability distribution to be placed on parameters, so the use of Bayes’ theorem to update beliefs about parameters in light of data is not allowed in that framework. - Intractable integrals. - [Some history](http://www.statslife.org.uk/images/pdf/timeline-of-statistics.pdf) --- # What kind of models are we talking about? Bayesian statistics encompasses a specific class of models that could be used for machine learning. Typically, one draws on Bayesian models for one or more of a variety of reasons, such as: - Having relatively few data points. - Having strong prior intuitions (from pre-existing observations/models) about how things work. - Having high levels of uncertainty, or a strong need to quantify the level of uncertainty about a particular model or comparison of models. - Wanting to claim something about the likelihood of the alternative hypothesis, rather than simply accepting/rejecting the null hypothesis. Source: https://towardsdatascience.com/will-the-sun-rise-tomorrow-introduction-to-bayesian-statistics-for-machine-learning-6324dfceac2e --- # What kind of models are we talking about? Why don't we use Bayesian Methods in ML all the time: - Most machine learning is done in the context of “big data” where the signature of Bayesian models — priors — don’t actually play much of a role. - Sampling posterior distributions in Bayesian models is computationally expensive and slow. Nevertheless, since Bayesian statistics provides a framework for updating “knowledge”, it is, in fact, used a whole lot in machine learning. Examples: Gaussian processes, simple linear regression, have Bayesian and non-Bayesian versions. There are also algorithms that are purely frequentist (e.g. support vector machines, random forest), and those that are purely Bayesian (e.g. variational inference, expectation maximization). --- # A simple example Let's go back to the Coca-Cola vs Pepsi survey. The probability of observing a case on favor of Pepsi, given the value of `\(\theta\)` (proportion of people who prefer Pepsi), comes from a binomial distribution. Since x is the sum of cases on favor of Pepsi, this is the likelihood: `\(P(x | n, \theta)\)` = `\(n \choose x\)` `\(\theta^x (1-\theta)^{n-x}\)` With the likelihood decided, we must now choose a prior distribution `\(p(\theta)\)`. A convenient prior in this case is the beta distribution, which has two parameters `\(\alpha\)` and `\(\beta\)`: `$$p(\theta | \alpha, \beta) = \frac{1}{B(\alpha,\beta)}\theta^{\alpha-1}(1-\theta)^{\beta-1}$$` The normalizing constant in this case is: `$$\int_0^1 \theta^{\alpha-1}(1-\theta)^{\beta-1}d \theta$$` --- # A simple example The posterior distribution: $$p(\theta | x, n, \alpha, \beta) = \frac{P(x | n, \theta)p(\theta | \alpha, \beta)}{\int P(x | n, \theta)p(\theta | \alpha, \beta)d \theta} $$ It can be shown that this is equal to: `$$p(\theta | x, n, \alpha, \beta) = Beta(\alpha+x, \beta+n-x)$$` <img src="/PF-3115/assets/img/bayes2.png" alt="bayes2" width="300"/> --- # Bayesian Statistics - DIY - If you want to do this exercise at home, it will help you to understand how Bayesian inference works in practice [Bayesian Coin Flip](https://nbviewer.jupyter.org/github/lambdafu/notebook/blob/master/math/Bayesian%20Coin%20Flip.ipynb) - If you are interested in taking a whole class about Bayesian Methods and Machine Learning, I strongly recommend this one: https://www.cse.wustl.edu/~garnett/cse515t/spring_2017/ --- class: inverse, center, middle # PCA ## Principal Components Analysis --- # PCA https://notsquirrel.com/pca/ --- # PCA - DIY If you want to do PCA in Python, you can follow this tutorial from Stat Quest https://www.youtube.com/watch?v=Lsue2gEM9D0 Practical tips: https://www.youtube.com/watch?v=oRvgq966yZg --- class: center, middle, inverse Slides creadas via R package [**xaringan**](https://github.com/yihui/xaringan). El chakra viene de [remark.js](https://remarkjs.com), [**knitr**](http://yihui.name/knitr), and [R Markdown](https://rmarkdown.rstudio.com).