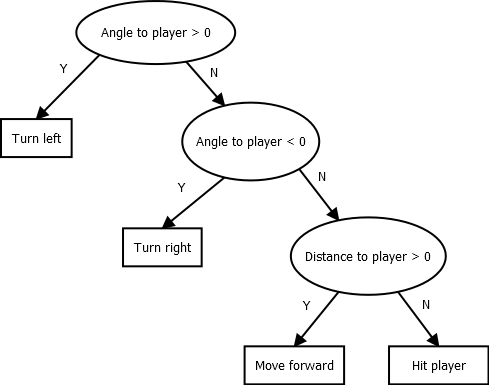

class: center, middle # AI in Digital Entertainment ### AI Behavior --- class: center, middle # Intelligent Agents --- # Agents * An *agent* is an entity that perceives its environment and acts on it * Some/Many people frown upon saying that something is "an AI" and prefer the term "agent" * Agents come in many different forms - Robots - Software - One could view humans or other living entities as agents --- class: medium # PEAS * **P**erformance: How do we measure the quality of the agent (e.g. score, player enjoyments) * **E**nvironment: What surroundings is the agent located in (for us typically a game, but which part of the game) * **A**ctuators: Which actions can the agent perform (e.g. move, shoot a fireball, ...) * **S**ensors: How does the agent perceive the world (in games we typically give it access to some data structures representing the game, but some researchers work on playing games using screen captures) --- class: small # Agents in Games * Say you have some NPC character in your game that should be controlled by AI * Your game typically contains some main loop that updates all game objects and renders them * At some points you run an AI update * This means, all our agents receive one "update" call every x ms, and this update call has to make the necessary decisions * Simplest approach: On each update, the agent reads the sensor values calculates the which actuators to use based on these values --- # Braitenberg Vehicles * Valentino Braitenberg proposed a thought experiment with simple two-wheel vehicles * The vehicles had two light sensors, and there was a light in the room * Each of the two sensors would be connected to one of the wheels * Depending on how this was done, the vehicle would seek or flee from the light * The behavior of the agent is fully reactive, with no memory --- # Braitenberg Vehicles <img src="/PF-3341/assets/img/braitenberg.png" width="70%"/> --- # A first agent: An enemy in an ARPG * Performance: How much damage it can do to the player? * Environment: A dungeon in the game * Actuators: Rotate, move forward, hit * Sensors: Player position (With that we can compute distance and angle to the player) --- # Behavior for our ARPG agent * If the angle to the player is greater than 0, turn left * (else) If the angle to the player is less than 0, turn right * (else) If the distance to the player is greater than 0, move forward * (else) Hit the player --- # Limitations * This is, of course, a very simple agent * Imagine if there were walls * What if we want the enemy to have different modes of engagement, flee when it is in danger, etc.? * How did we even come up with these conditions? * How could we make this a bit friendlier to edit? --- # Decision Trees  --- # Decision Trees: Limitations * We haven't actually changed anything from the if statements (other than drawing them) * Designing a decision tree is still a lot of manual work * There's also no persistence, the agent will decide a new behavior every time the tree is evaluated * There is one nice thing: Decision trees can (sometimes) be learned with Machine Learning techniques --- class: center, middle # Finite State Machines --- # States? * Say we want our enemy to attack more aggressively if they have a lot of health and try to flee when they become wounded * In other words: The enemy has a *state* that determines what they do, in addition to their inputs and outputs * But we'll need new sensors: The enemy needs to know their own health level * Let's also give them a ranged weapon --- # Finite State Machines * *States* represent what the agent is currently supposed to do * Each state is associated with *actions* the agent should perform in that state * *Transitions* between the states observe the sensors and change the state when a condition is met * The agent starts in some designated state, and can only be in one state at a time --- # Finite State Machines <img src="/PF-3341/assets/img/fsm.png" width="100%"/> --- class: small # Finite State Machines: Limitations * There's no real concept of "time", it has to be "added" * If you just want to add one state you have to determine how it relates to every other state * If you have two Finite State Machines they are hard to compose * It's also kind of hard to reuse subparts * For example: The parts of our state machine that is used to engage an enemy at range could be useful for an archer guard on a wall, but how do we take *just* those parts? --- # Hierarchical Finite State Machines * Finite State Machines define the behavior of the agent * But we said the nodes are behaviors?! * We can make each node another sub-machine! * This leads to *some* reusability, and eases authoring --- class: center, middle # Behavior Trees --- class: small # Behavior Trees * Let's still use a graph, but make it a tree! * If we have a subtree, we now only need to worry about one connection: its parent * The *leafs* of the tree will be the actual actions, while the interior nodes define the decisions * Each node can either be successful or not, which is what the interior nodes use for the decisions * We can have different kinds of nodes for different kinds of decisions * This is extensible (new kinds of nodes), easily configurable (just attach different nodes together to make tree) and reusable (subtrees can be used multiple times) --- class: small # Behavior Trees * Every AI time step the root node of the tree is executed * Each node saves its state: - Which child is currently executing for interior nodes - Which state the execution is in for leaf nodes * When a node is executed, it executes its currently executing child * When a leaf node is executed and finishes, it returns success or failure to its parent * The parent then makes a decision based on this result --- # Behavior Trees: Common Node types * Choice/Selector: Execute children in order until one succeeds * Sequence: Execute children in order until one fails * Loop: Keep executing child (or children) until one fails * Random choice: Execute one of the children at random * etc. --- # Behavior Trees: How do you make an "if" statement? * Some actions are just "checks", they return success iff the check passes * A *sequence* consisting of a check and another node will only execute the second node if the check passes * If we put multiple such sequences as children of a choice, the first sequence with a passing condition will be executed --- # Behavior Trees <img src="/PF-3341/assets/img/bt.png" width="100%"/> --- class: center, middle # Let's make a Behavior Tree for our ARPG enemy! --- # Behavior Trees * Behavior Trees are a very powerful technique and widely used in games * Halo 2, for example, used them * Unreal Engine has built-in support for Behavior Trees (there are plugins for Unity) * The tree structure usually allows for visual editing (which Unreal Engine also has built-in) --- # Behavior Trees in Unreal Engine <img src="/PF-3341/assets/img/bteditor.jpg" width="70%"/> --- class: center, middle # Pathfinding --- # Graphs * A graph G = (V,E) consists of *vertices* (nodes) V and *edges* (connections) `\( E \subseteq V \times V \)` * Graphs can be connected, or have multiple components * Graphs can be directed (one-way streets) or undirected * Edges can have weights (costs) associated with them: `\( w: E \mapsto \mathbb{R} \)` * We can represent many things in graphs --- # The (undirected) Pathfinding problem Given a graph G = (V,E), with edge weights w, a start node `\( s \in V \)`, a destination node `\( d \in V \)`, find a sequence of vertices `\( v_1, v_2, \ldots, v_n \)`, such that `\(v_1 = s, v_n = d \)` and `\( \forall i: (v_i, v_{i+1}) \in E \)` We call the sequence `\( v_1, v_2, \ldots, v_n \)` a *path*, and the *cost* of the path is `\( \sum_i w((v_i,v_{i+1})) \)` -- This means what you would expect: To find a path from a start node to a destination node means to find vertices to walk through that lead from the start to the destination by being connected with edges. The cost is the sum of the costs of edges that need to be traversed. --- # Another example: Romania <img src="/CI-2700/assets/img/romania.png" width="100%"/> --- # How could we find a path? <img src="/CI-2700/assets/img/pathproblem.png" width="100%"/> --- class: medium # Uninformed Search - The simplest pathfinding algorithm works like this: - Keep track of which nodes are candidates for expansion (starting with the start node) - Take one of these nodes and expand it - If you reach the target, you have found a path - How do you "keep track" of nodes? - Use a list/queue: You now have "breadth-first search" - Use a stack: You now have "depth-first search" --- # Uninformed Search <img src="/PF-3341/assets/img/bfsdfs.png" width="100%"/> --- class: small # Heuristic Search * What if we can give the path finding algorithm some more information? * For example, we may not know how to drive everywhere, but we can measure the straight line distance * This "extra" information is called a "heuristic" * Search algorithms can use it to "guide" the search process --- # Heuristic Search: General algorithm * We use the same algorithm as above: - Keep track of which nodes are candidates for expansion (starting with the start node) - Take one of these nodes and expand it - If you reach the target, you have found a path * Instead of using a stack or list, we use a *priority queue*, where the nodes are ordered according to some value derived from the heuristic * So how do we determine this value? --- # Greedy Search * Let's use our heuristic! * We order the nodes in the priority queue by heuristic value * Heuristic: straight line distance to Bucharest --- class: tiny # Greedy Search <table><tr><td> <img src="/CI-2700/assets/img/pathproblem.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Arad (366) --- class: tiny # Greedy Search <table><tr><td> <img src="/CI-2700/assets/img/greedy/1.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Sibiu (253), Timisoara (329), Zerind (374) --- class: tiny # Greedy Search <table><tr><td> <img src="/CI-2700/assets/img/greedy/2.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Fagaras (176), Rimnicu Vilcea (193), Timisoara (329), Zerind (374), Oradea (380) --- class: tiny # Greedy Search <table><tr><td> <img src="/CI-2700/assets/img/greedy/3.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Bucharest (0), Rimnicu Vilcea (193), Timisoara (329), Zerind (374), Oradea (380) --- # A* Search * Greedy search sometimes does not give us the optimal result * It tries to get to the goal as fast as possible, but ignores the cost of actually getting to each node * Idea: Instead of using the node with the lowest heuristic value, use the node with the lowest sum of heuristic value and cost to get to * This is called A* search --- class: tiny # A* Search <table><tr><td> <img src="/CI-2700/assets/img/pathproblem.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Arad (0 + 366) --- class: tiny # A* Search <table><tr><td> <img src="/CI-2700/assets/img/as/1.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Sibiu (140 + 253), Timisoara (118 + 329), Zerind (75 + 374) --- class: tiny # A* Search <table><tr><td> <img src="/CI-2700/assets/img/as/2.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Rimnicu Vilcea (220 + 193), Fagaras (239 + 176), Timisoara (118 + 329), Zerind (75 + 374), Orodea (291 + 380) --- class: tiny # A* Search <table><tr><td> <img src="/CI-2700/assets/img/as/3.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Fagaras (239 + 176), Pitesti (317 + 100), Timisoara (118 + 329), Zerind (75 + 374), Craiova (366 + 160), Orodea (291 + 380) --- class: tiny # A* Search <table><tr><td> <img src="/CI-2700/assets/img/as/4.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Pitesti (317 + 100), Timisoara (118 + 329), Zerind (75 + 374), Bucharest (450 + 0), Craiova (366 + 160), Orodea (291 + 380) --- class: tiny # A* Search <table><tr><td> <img src="/CI-2700/assets/img/as/5.png" width="90%"/></td><td> Heuristic:<br/> <table> <tr><td>Arad</td><td>366</td></tr> <tr><td>Bucharest</td><td>0</td></tr> <tr><td>Craiova</td><td>160</td></tr> <tr><td>Drobeta</td><td>242</td></tr> <tr><td>Eforie</td><td>161</td></tr> <tr><td>Fagaras</td><td>176</td></tr> <tr><td>Giurgiu</td><td>77</td></tr> <tr><td>Hirsova</td><td>151</td></tr> <tr><td>Iasi</td><td>226</td></tr> <tr><td>Lugoj</td><td>244</td></tr> <tr><td>Mehadia</td><td>241</td></tr> <tr><td>Neamt</td><td>234</td></tr> <tr><td>Oradea</td><td>380</td></tr> <tr><td>Pitesti</td><td>100</td></tr> <tr><td>Rimnicu Vilcea</td><td>193</td></tr> <tr><td>Sibiu</td><td>253</td></tr> <tr><td>Timisoara</td><td>329</td></tr> <tr><td>Urziceni</td><td>80</td></tr> <tr><td>Vaslui</td><td>199</td></tr> <tr><td>Zerind</td><td>374</td></tr> </table> </td></tr></table> Frontier: Bucharest (418 + 0), Timisoara (118 + 329), Zerind (75 + 374), Craiova (366 + 160), Orodea (291 + 380) --- class: small # A* Search * To find *optimal* solution, keep expanding nodes until the goal node is the best node in the frontier * A* is actually guaranteed to find the optimal solution if the heuristic is: - Admissible: Never overestimate the cost - Consistent: For a node x and its neighbor y, the heuristic value for x has to be less than or equal to that of y plus the cost of getting from x to y * You can also reduce the memory requirements of A* by using Iterative Deepening: - Limit search to a particular depth - If no path is found, increase the limit --- # Dijkstra's algorithm * You may have heard of Dijkstra's algorithm (and its variants) before * Dijkstra's algorithm is basically A* without using the heuristic * In some popular formulations you also let the algorithm compute a path for *every* possible destination * This will give you a shortest path tree, which may be useful if you have to repeatedly find a path to different destinations --- class: small # Search * While we have looked at finding paths in physical spaces so far, there are many other applications * Take, for example, Super Mario * An AI could play the game using A* <img src="/CI-2700/assets/img/marioas.png" width="50%"/> --- # Applications - A* is widely applied in games - Unity's built-in navigation module uses A* - But how do you apply A* to a 3D world? - We need a graph! - Idea: Divide the game world into regions, and assign each region a graph node --- # Example: World of Warcraft <img src="/CI-2700/assets/img/wowhalaaview.png" width="80%"/> --- # Example: World of Warcraft <img src="/CI-2700/assets/img/wowhalaa.jpg" width="80%"/> --- # Example: World of Warcraft <img src="/CI-2700/assets/img/wowhalaa2.jpg" width="80%"/> --- # Example: World of Warcraft <img src="/CI-2700/assets/img/wowhalaa3.jpg" width="80%"/> --- # Example: World of Warcraft <img src="/CI-2700/assets/img/wowhalaa4.jpg" width="80%"/> --- class: center, middle # Utility-based AI --- # Utility * Sometimes we can just assign a numerical value ("score") to the observations, and then combine these scores in some way to get a decision * For example, we can assign a score to the distance from the player, the agent's health, maybe their remaining mana, etc. * Then, we can calculate a score for a melee attack by *weighing* the distance as more significant than the health, and mana being irrelevant * On the other hand, the score for a fireball would be more affected by the remaining mana and less by the distance (up to a threshold, perhaps) * The agent then simply picks the action with the highest score/utility --- # Utility: Our ARPG enemy Three options: melee, fireball or run away $$ u_m = 0.8 \cdot d + 0.2 \cdot h + 0 \cdot m \\\\ u_f = 0.4 \cdot d + 0.2 \cdot h + 0.4 \cdot m \\\\ u_r = 0.4 \cdot d + 0.6 \cdot h + 0 \cdot m $$ We are 80 units away from the player, have 90% health and 100% mana. What do we do? -- At which distance would we attack the player? -- We need to define the scores! Let's say $$ d = \frac{80}{\mathit{distance} + 80}, h = \frac{\mathit{health}}{100}, m = \frac{\mathit{mana}}{100} $$ --- class: small # Utility: Advantages and Limitations * The main advantage of this utility-based approach is that it is easy to extend * If a new action becomes available: assign a scoring function to it, and the agent will automatically consider it * If a new kind of observation becomes available: add it to the scoring functions where it is relevant * Drawback: The scaling of the utility scores needs to be consistent (often easiest achieved by normalizing them to be between 0 and 1) * Another drawback: Determining the formulas for each action/option is non-trivial, especially when they have many terms --- class: small # Utility: Pathfinding * A utility-based approach can also be used for pathfinding * Assign a utility value to each space in the game * The goal has (very) high utility * Obstacles have negative utility * Each of these utility values is actually a field of values * The total utility of the space is the sum of these fields --- # Potential Fields <img src="/PF-3341/assets/img/pffig2c.png" width="80%"/> --- # Potential Fields <img src="/PF-3341/assets/img/pffig3.png" width="80%"/> --- # Potential Fields <img src="/PF-3341/assets/img/pffig12a.png" width="80%"/> --- # Flow Fields <img src="/PF-3341/assets/img/flowfield.png" width="80%"/> --- class: small # Potential Fields * Potential (and Flow) Fields can be a very efficient way to find paths in large and complex environments * Local optima are a big problem. Potential solutions: - Save the trail of the unit to avoid revisiting the same location - Make obstacles convex (virtually) - Use a forward simulation and use some other pathfinding algorithm to find a way out of local optima * It is easy to combine them with strategic decision making: add more utility to higher-priority targets, add more negative utility to dangerous areas, etc. * Scaling and tweaking can still be challenging --- class: small # Utility: Task Assignment * Say we have StarCraft, a real-time strategy game * The AI agent controls a number of squads of different units * There are several possible targets for each squad to attack * Let's assign a utility value for each combination of squad and target! * Utility values can be zero (e.g. the squad and the target both die), or maybe even negative (trying to attack airborne Wraiths with ground-only Zerglings) * We want all targets to be attacked --- # Utility: Task Assignment * To assign squads to targets, we calculate the utility of a particular assignment as the sum of all individual utilities * For example, if squad 1 attacking target 1 has utility 0.4, and squad 2 attacking target 2 has utility 0.1, the total utility is 0.5 * We calculate these utilities for *all* possible assignments * Then we pick the assignment with the highest total utility --- # Another Task Assignment Example * Instead of squads of units we have students * Instead of targets to attack we have papers to present * And y'all sent me the utility values ... * Papers were assigned to maximize total utility --- # Paper Task Assignment ```Haskell u 1 1 = 1.0 u 1 3 = 0.66 u 1 6 = 0.33 u 1 _ = -1.0 utility :: [(Int,Int)] -> Double utility assignments = sum $ map (uncurry u) assignments makeAssignment :: [Int] -> [Int] -> [(Int,Int)] makeAssignment students topics = maximumBy (comparing utility) assignments where assignments = map (zip students) $ permutations topics ``` ([Source](/PF-3341/assets/img/assign.hs)) See any problem? -- Here's a bad word for you: **permutations** --- class: small # Another Task Assignment Example * 13 topics means 13! possible assignments * 13! = 6 227 020 800 * Even an optimized build of the assignment program takes a while to run * Several solutions: - We know/can guess that several assignments are not going to be good - We may be able to reduce the problem in other ways (some papers were only picked by one student as their first choice) - Randomly pick a few options and choose the best of those (may be "good enough" for many games) - Pick an assignment and incrementally improve it - Use some optimization technique --- class: center, middle # Game Trees --- # Adversaries? * So far we have only looked at making decisions based on our own plans * What if the other player can make several different decisions in response to our action? * For example, how can we play chess, accounting for what the opponent will do? * Adversarial search! --- class: small # Minimax * Let's say we want to get the highest possible score * Then our opponent wants us to get the lowest possible score * For each of our potential actions, we look at each of the opponents possible actions * The opponent will pick the action that gives us the lowest score, and we will pick from our actions the one where the opponent's choice gives us the highest score * How does the opponent decide what to pick? The same way! --- # Minimax <svg xmlns:dc="http://purl.org/dc/elements/1.1/" xmlns:cc="http://web.resource.org/cc/" xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#" xmlns:svg="http://www.w3.org/2000/svg" xmlns="http://www.w3.org/2000/svg" width="701" height="410" id="svg2" version="1.0"> <defs id="defs4" /> <sodipodi:namedview id="base" pagecolor="#ffffff" bordercolor="#666666" borderopacity="1.0" gridtolerance="10000" guidetolerance="10" objecttolerance="10" showgrid="true" /> <metadata id="metadata7"> <rdf:RDF> <cc:Work rdf:about=""> <dc:format>image/svg+xml</dc:format> <dc:type rdf:resource="http://purl.org/dc/dcmitype/StillImage" /> </cc:Work> </rdf:RDF> </metadata> <g id="layer1" transform="translate(-38.99998,-322.3622)"> <g id="g7309" style="fill:#e3e3e3;fill-opacity:1"> <path transform="translate(129,-9)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path1874" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="411.36218" x="261" height="50" width="50" id="rect2762" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="411.36218" x="561" height="50" width="50" id="rect2764" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(-99,169)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2770" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(51,169)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2772" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(211,169)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2774" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(369,169)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2776" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="119" height="50" width="50" id="rect2778" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="241" height="50" width="50" id="rect2780" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="331" height="50" width="50" id="rect2782" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="431" height="50" width="50" id="rect2784" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="551" height="50" width="50" id="rect2788" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="649" height="50" width="50" id="rect2790" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(-189,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2794" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(-131,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2796" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(-39,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2798" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(51,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2800" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(121,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2802" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(179,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2804" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(271,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2806" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(339,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2808" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(401,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2810" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> </g> <g id="g7333"> <path id="path2812" d="M 290,402.36218 C 290,402.36218 283.57143,402.36218 433.57143,382.36218 C 573.57143,402.36218 580,402.36218 580,402.36218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path2814" d="M 210,490.36218 C 290,470.36218 285,470.36218 285,470.36218 L 350,490.36218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path2816" d="M 520.5,489.86218 C 585.5,471.29075 585.5,471.29075 585.5,471.29075 L 670.5,492.00504" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3703" d="M 151.21429,579.86218 C 206.21429,559.86218 205.5,559.86218 205.5,559.86218 L 258.35714,579.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3705" d="M 462.5,579.86218 C 517.5,559.86218 516.78571,559.86218 516.78571,559.86218 L 569.64285,579.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3707" d="M 119.50001,670.14789 C 146.64286,651.57647 146.64286,650.86218 146.64286,650.86218 L 170.21429,670.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3709" d="M 428.78572,670.14789 C 455.92857,651.57647 455.92857,650.86218 455.92857,650.86218 L 479.5,670.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3711" d="M 648.78572,670.14789 C 675.92857,651.57647 675.92857,650.86218 675.92857,650.86218 L 699.5,670.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3713" d="M 266,650.36218 L 266,670.36218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3715" d="M 355.5,650.86218 L 355.5,670.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3717" d="M 574.57143,650.07647 L 574.57143,670.07647" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3719" d="M 675.5,560.86218 L 675.5,580.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3721" d="M 354.5,559.86218 L 354.5,579.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> </g> <g id="g7348" style="stroke:black;stroke-opacity:1"> <path id="path4608" d="M 739.5,392.86218 C 39.5,392.86218 39.5,392.86218 39.5,392.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:1;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:6, 6;stroke-dashoffset:0;stroke-opacity:1" /> <path id="path6380" d="M 739.5,482.86218 C 39.5,482.86218 39.5,482.86218 39.5,482.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:1;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:6, 6;stroke-dashoffset:0;stroke-opacity:1" /> <path id="path6382" d="M 739.5,572.86218 C 39.50004,572.86218 39.50004,572.86218 39.50004,572.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:1;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:6, 6;stroke-dashoffset:0;stroke-opacity:1" /> <path id="path6384" d="M 739.5,662.86218 C 39.49998,662.86218 39.49998,662.86218 39.49998,662.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:1;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:6, 6;stroke-dashoffset:0;stroke-opacity:1" /> </g> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="38.339844" y="361.8739" id="text6386"><tspan id="tspan6388" x="38.339844" y="361.8739" style="font-size:40px;font-family:Arial">0</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="38.339844" y="451.8739" id="text6390"><tspan id="tspan6392" x="38.339844" y="451.8739" style="font-size:40px;font-family:Arial">1</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="38.339844" y="541.8739" id="text6394"><tspan id="tspan6396" x="38.339844" y="541.8739" style="font-size:40px;font-family:Arial">2</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="38.339844" y="631.8739" id="text6398"><tspan id="tspan6400" x="38.339844" y="631.8739" style="font-size:40px;font-family:Arial">3</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="40.339844" y="721.8739" id="text6402"><tspan id="tspan6404" x="40.339844" y="721.8739" style="font-size:40px;font-family:Arial">4</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="155.73828" y="715.85046" id="text1925"><tspan id="tspan1927" x="155.73828" y="715.85046" style="font-size:28px">+∞</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="98.951172" y="715.02039" id="text1929"><tspan id="tspan1931" x="98.951172" y="715.02039" style="font-size:28px;font-family:Arial">10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="256.95117" y="716.02039" id="text1933"><tspan id="tspan1935" x="256.95117" y="716.02039" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="336.18164" y="714.48718" id="text1937"><tspan id="tspan1939" x="336.18164" y="714.48718" style="font-size:28px;font-family:Arial">-10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="418.95117" y="716.02039" id="text1941"><tspan id="tspan1943" x="418.95117" y="716.02039" style="font-size:28px;font-family:Arial">7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="476.95117" y="716.02039" id="text1945"><tspan id="tspan1947" x="476.95117" y="716.02039" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="690.18164" y="716.48718" id="text1949"><tspan id="tspan1951" x="690.18164" y="716.48718" style="font-size:28px;font-family:Arial">-5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="630.95117" y="716.02039" id="text1953"><tspan id="tspan1955" x="630.95117" y="716.02039" style="font-size:28px;font-family:Arial">-7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="560.44141" y="715.60242" id="text1957"><tspan id="tspan1959" x="560.44141" y="715.60242" style="font-size:28px;font-family:Arial">-∞</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="126.18164" y="624.48718" id="text1961"><tspan id="tspan1963" x="126.18164" y="624.48718" style="font-size:28px;font-family:Arial">10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="257.54883" y="626.13171" id="text1965"><tspan id="tspan1967" x="257.54883" y="626.13171" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="334.83008" y="626.48718" id="text1969"><tspan id="tspan1971" x="334.83008" y="626.48718" style="font-size:28px;font-family:Arial">-10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="659.11133" y="626.36218" id="text1981"><tspan id="tspan1983" x="659.11133" y="626.36218" style="font-size:28px;font-family:Arial">-7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="190.18164" y="536.48718" id="text1985"><tspan id="tspan1987" x="190.18164" y="536.48718" style="font-size:28px;font-family:Arial">10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="334.83008" y="534.48718" id="text1989"><tspan id="tspan1991" x="334.83008" y="534.48718" style="font-size:28px;font-family:Arial">-10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="559.11133" y="627.66687" id="text1997"><tspan id="tspan1999" x="559.11133" y="627.66687" style="font-size:28px;font-family:Arial">-∞</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="659.11133" y="536.36218" id="text2009"><tspan id="tspan2011" x="659.11133" y="536.36218" style="font-size:28px;font-family:Arial">-7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="571.11133" y="446.36218" id="text2013"><tspan id="tspan2015" x="571.11133" y="446.36218" style="font-size:28px;font-family:Arial">-7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="266.83008" y="444.48718" id="text2017"><tspan id="tspan2019" x="266.83008" y="444.48718" style="font-size:28px;font-family:Arial">-10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="448.83789" y="626.02039" id="text2021"><tspan id="tspan2023" x="448.83789" y="626.02039" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="507.41177" y="535.82721" id="text2025"><tspan id="tspan2027" x="507.41177" y="535.82721" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="419.11133" y="356.36218" id="text4034"><tspan id="tspan4036" x="419.11133" y="356.36218" style="font-size:28px;font-family:Arial">-7</tspan></text> </g> </svg> --- # Minimax <svg xmlns:dc="http://purl.org/dc/elements/1.1/" xmlns:cc="http://web.resource.org/cc/" xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#" xmlns:svg="http://www.w3.org/2000/svg" xmlns="http://www.w3.org/2000/svg" width="701" height="410" id="svg2" version="1.0"> <defs id="defs4" /> <metadata id="metadata7"> <rdf:RDF> <cc:Work rdf:about=""> <dc:format>image/svg+xml</dc:format> <dc:type rdf:resource="http://purl.org/dc/dcmitype/StillImage" /> </cc:Work> </rdf:RDF> </metadata> <g id="layer1" transform="translate(-38.99998,-322.3622)"> <g id="g7309" style="fill:#e3e3e3;fill-opacity:1"> <path transform="translate(129,-9)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path1874" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="411.36218" x="261" height="50" width="50" id="rect2762" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="411.36218" x="561" height="50" width="50" id="rect2764" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(-99,169)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2770" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(51,169)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2772" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(211,169)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2774" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(369,169)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2776" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="119" height="50" width="50" id="rect2778" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="241" height="50" width="50" id="rect2780" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="331" height="50" width="50" id="rect2782" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="431" height="50" width="50" id="rect2784" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="551" height="50" width="50" id="rect2788" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="591.36218" x="649" height="50" width="50" id="rect2790" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(-189,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2794" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(-131,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2796" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(-39,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2798" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(51,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2800" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(121,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2802" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(179,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2804" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(271,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2806" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(339,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2808" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path transform="translate(401,349)" d="M 330 357.36218 A 25 25 0 1 1 280,357.36218 A 25 25 0 1 1 330 357.36218 z" id="path2810" style="fill:#e3e3e3;fill-opacity:1;stroke:black;stroke-width:2;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> </g> <g id="g7333"> <path id="path2812" d="M 290,402.36218 C 290,402.36218 283.57143,402.36218 433.57143,382.36218 C 573.57143,402.36218 580,402.36218 580,402.36218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path2814" d="M 210,490.36218 C 290,470.36218 285,470.36218 285,470.36218 L 350,490.36218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path2816" d="M 520.5,489.86218 C 585.5,471.29075 585.5,471.29075 585.5,471.29075 L 670.5,492.00504" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3703" d="M 151.21429,579.86218 C 206.21429,559.86218 205.5,559.86218 205.5,559.86218 L 258.35714,579.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3705" d="M 462.5,579.86218 C 517.5,559.86218 516.78571,559.86218 516.78571,559.86218 L 569.64285,579.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3707" d="M 119.50001,670.14789 C 146.64286,651.57647 146.64286,650.86218 146.64286,650.86218 L 170.21429,670.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3709" d="M 428.78572,670.14789 C 455.92857,651.57647 455.92857,650.86218 455.92857,650.86218 L 479.5,670.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3711" d="M 648.78572,670.14789 C 675.92857,651.57647 675.92857,650.86218 675.92857,650.86218 L 699.5,670.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3713" d="M 266,650.36218 L 266,670.36218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3715" d="M 355.5,650.86218 L 355.5,670.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3717" d="M 574.57143,650.07647 L 574.57143,670.07647" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3719" d="M 675.5,560.86218 L 675.5,580.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path3721" d="M 354.5,559.86218 L 354.5,579.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:2;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> </g> <g id="g7348" style="stroke:black;stroke-opacity:1"> <path id="path4608" d="M 739.5,392.86218 C 39.5,392.86218 39.5,392.86218 39.5,392.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:1;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:6, 6;stroke-dashoffset:0;stroke-opacity:1" /> <path id="path6380" d="M 739.5,482.86218 C 39.5,482.86218 39.5,482.86218 39.5,482.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:1;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:6, 6;stroke-dashoffset:0;stroke-opacity:1" /> <path id="path6382" d="M 739.5,572.86218 C 39.50004,572.86218 39.50004,572.86218 39.50004,572.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:1;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:6, 6;stroke-dashoffset:0;stroke-opacity:1" /> <path id="path6384" d="M 739.5,662.86218 C 39.49998,662.86218 39.49998,662.86218 39.49998,662.86218" style="fill:none;fill-rule:evenodd;stroke:black;stroke-width:1;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:6, 6;stroke-dashoffset:0;stroke-opacity:1" /> </g> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="38.339844" y="361.8739" id="text6386"><tspan id="tspan6388" x="38.339844" y="361.8739" style="font-size:40px;font-family:Arial">0</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="38.339844" y="451.8739" id="text6390"><tspan id="tspan6392" x="38.339844" y="451.8739" style="font-size:40px;font-family:Arial">1</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="38.339844" y="541.8739" id="text6394"><tspan id="tspan6396" x="38.339844" y="541.8739" style="font-size:40px;font-family:Arial">2</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="38.339844" y="631.8739" id="text6398"><tspan id="tspan6400" x="38.339844" y="631.8739" style="font-size:40px;font-family:Arial">3</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="40.339844" y="721.8739" id="text6402"><tspan id="tspan6404" x="40.339844" y="721.8739" style="font-size:40px;font-family:Arial">4</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="155.73828" y="715.85046" id="text1925"><tspan id="tspan1927" x="155.73828" y="715.85046" style="font-size:28px">+∞</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="98.951172" y="715.02039" id="text1929"><tspan id="tspan1931" x="98.951172" y="715.02039" style="font-size:28px;font-family:Arial">10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="256.95117" y="716.02039" id="text1933"><tspan id="tspan1935" x="256.95117" y="716.02039" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="336.18164" y="714.48718" id="text1937"><tspan id="tspan1939" x="336.18164" y="714.48718" style="font-size:28px;font-family:Arial">-10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="418.95117" y="716.02039" id="text1941"><tspan id="tspan1943" x="418.95117" y="716.02039" style="font-size:28px;font-family:Arial">7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="476.95117" y="716.02039" id="text1945"><tspan id="tspan1947" x="476.95117" y="716.02039" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="690.18164" y="716.48718" id="text1949"><tspan id="tspan1951" x="690.18164" y="716.48718" style="font-size:28px;font-family:Arial">-5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="630.95117" y="716.02039" id="text1953"><tspan id="tspan1955" x="630.95117" y="716.02039" style="font-size:28px;font-family:Arial">-7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="560.44141" y="715.60242" id="text1957"><tspan id="tspan1959" x="560.44141" y="715.60242" style="font-size:28px;font-family:Arial">-∞</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="126.18164" y="624.48718" id="text1961"><tspan id="tspan1963" x="126.18164" y="624.48718" style="font-size:28px;font-family:Arial">10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="257.54883" y="626.13171" id="text1965"><tspan id="tspan1967" x="257.54883" y="626.13171" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="334.83008" y="626.48718" id="text1969"><tspan id="tspan1971" x="334.83008" y="626.48718" style="font-size:28px;font-family:Arial">-10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="659.11133" y="626.36218" id="text1981"><tspan id="tspan1983" x="659.11133" y="626.36218" style="font-size:28px;font-family:Arial">-7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="190.18164" y="536.48718" id="text1985"><tspan id="tspan1987" x="190.18164" y="536.48718" style="font-size:28px;font-family:Arial">10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="334.83008" y="534.48718" id="text1989"><tspan id="tspan1991" x="334.83008" y="534.48718" style="font-size:28px;font-family:Arial">-10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="559.11133" y="627.66687" id="text1997"><tspan id="tspan1999" x="559.11133" y="627.66687" style="font-size:28px;font-family:Arial">-∞</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="659.11133" y="536.36218" id="text2009"><tspan id="tspan2011" x="659.11133" y="536.36218" style="font-size:28px;font-family:Arial">-7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="571.11133" y="446.36218" id="text2013"><tspan id="tspan2015" x="571.11133" y="446.36218" style="font-size:28px;font-family:Arial">-7</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="266.83008" y="444.48718" id="text2017"><tspan id="tspan2019" x="266.83008" y="444.48718" style="font-size:28px;font-family:Arial">-10</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="448.83789" y="626.02039" id="text2021"><tspan id="tspan2023" x="448.83789" y="626.02039" style="font-size:28px;font-family:Arial">5</tspan></text> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="507.41177" y="535.82721" id="text2025"><tspan id="tspan2027" x="507.41177" y="535.82721" style="font-size:28px;font-family:Arial">5</tspan></text> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 109.78299,676.61979 C 108.76217,669.18889 112.83152,657.11102 122.56091,648.79111" id="path2029" /> <path sodipodi:type="star" style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path3987" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.541426,-0.840748,0.840748,-0.541426,-396.4152,953.1478)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 139.28753,583.38756 C 139.56041,571.20959 160.19175,548.97904 172.2685,541.66929" id="path3989" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path3991" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.492129,-0.870521,0.870521,-0.492129,-363.6836,815.2334)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 249.59321,681.9418 C 245.54194,674.5109 244.05545,665.21095 248.73407,652.85043" id="path3994" /> <path sodipodi:type="star" style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path3996" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.935654,-0.352918,0.352918,-0.935654,12.9768,1206.056)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 338.26132,680.86218 C 334.21005,673.43128 332.72356,664.13133 337.40218,651.77081" id="path3998" /> <path sodipodi:type="star" style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4000" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.935654,-0.352918,0.352918,-0.935654,101.6449,1204.976)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 558.52771,681.05052 C 554.47644,673.61962 552.98995,664.31967 557.66857,651.95915" id="path4002" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4004" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.935654,-0.352918,0.352918,-0.935654,321.9113,1205.164)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 335.26132,585.86218 C 331.21005,578.43128 329.72356,569.13133 334.40218,556.77081" id="path4006" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4008" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.935654,-0.352918,0.352918,-0.935654,98.64494,1109.977)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 654.96827,586.52197 C 650.917,579.09107 649.43051,569.79112 654.10913,557.4306" id="path4010" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4012" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.935654,-0.352918,0.352918,-0.935654,418.3519,1110.636)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 450.52072,581.545 C 450.7936,569.36703 468.89956,549.66186 480.97631,542.35211" id="path4014" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4016" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.492129,-0.870521,0.870521,-0.492129,-54.45041,815.3909)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 490.34703,676.48754 C 491.36785,669.05664 487.2985,656.97877 477.56911,648.65886" id="path4018" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4020" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(0.541426,-0.840748,-0.840748,-0.541426,996.545,953.0155)"/> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 637.56152,677.4875 C 636.5407,670.0566 640.61005,657.97873 650.33944,649.65882" id="path4022"/> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4024" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-0.541426,-0.840748,0.840748,-0.541426,131.3633,954.0155)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 358.47931,492.86218 C 358.20643,480.68421 337.57509,458.45366 325.49834,451.14391" id="path4026"/> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4028" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(0.492129,-0.870521,-0.870521,-0.492129,861.4504,724.708)" /> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 678.47931,495.21933 C 678.78296,486.10484 664.71311,461.16459 623.35548,444.92963" id="path4030" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4032" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(0.274369,-0.961623,-0.961623,-0.274369,1209.226,586.0477)" /> <text xml:space="preserve" style="font-size:12px;font-style:normal;font-weight:normal;fill:black;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" x="419.11133" y="356.36218" id="text4034"><tspan id="tspan4036" x="419.11133" y="356.36218" style="font-size:28px;font-family:Arial">-7</tspan></text> <path style="fill:none;fill-rule:evenodd;stroke:red;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;marker-end:none;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 588.49649,403.67797 C 577.79949,367.41544 520.08418,351.37085 471.94409,348.38828" id="path4038" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:red;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4040" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-5.000154e-2,-0.998748,-0.998748,5.000154e-2,1071.611,292.9209)" /> <path style="fill:none;fill-rule:evenodd;stroke:blue;stroke-width:4;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 435,382.36218 C 580,402.36218 580,402.36218 580,402.36218" id="path4042" /> <path style="fill:#e3e3e3;fill-opacity:1;stroke:blue;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-dashoffset:0;stroke-opacity:1" id="path4929" d="M -25.001277,606.12732 L -26.502907,603.96383 L -28.004537,601.80034 L -25.380084,601.58163 L -22.75563,601.36293 L -23.878454,603.74513 L -25.001277,606.12732 z " transform="matrix(-5.902173e-2,1.114682,1.331056,4.942729e-2,-226.3558,400.4239)" /> </g> </svg> --- # Minimax Let's take a game where we "build" a binary number by choosing bits. The number starts with a 1, and each player can choose the next bit in order. The game ends when the number has 6 digits in total (after 5 choices), or if the same bit was chosen twice in a row. If the resulting number is even or prime, we get points equal to the number, otherwise the other player gets that many points. We want to know: What is our best first move assuming the other player plays optimally. --- # Alpha-beta Pruning <svg xmlns:dc="http://purl.org/dc/elements/1.1/" xmlns:cc="http://web.resource.org/cc/" xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#" xmlns:svg="http://www.w3.org/2000/svg" xmlns="http://www.w3.org/2000/svg" xmlns:sodipodi="http://sodipodi.sourceforge.net/DTD/sodipodi-0.dtd" xmlns:inkscape="http://www.inkscape.org/namespaces/inkscape" id="svg2" viewport width="800" height="400" viewBox="0 0 1250 650" version="1.0"> <metadata id="metadata7"> <rdf:RDF> <cc:Work rdf:about=""> <dc:format>image/svg+xml</dc:format> <dc:type rdf:resource="http://purl.org/dc/dcmitype/StillImage" /> </cc:Work> </rdf:RDF> </metadata> <defs id="defs5" /> <sodipodi:namedview inkscape:window-height="721" inkscape:window-width="1024" inkscape:pageshadow="2" inkscape:pageopacity="0.0" borderopacity="1.0" bordercolor="#666666" pagecolor="#ffffff" id="base" inkscape:zoom="0.44194175" inkscape:cx="986.44395" inkscape:cy="208.29432" inkscape:window-x="-4" inkscape:window-y="-4" inkscape:current-layer="g15440" height="615px" width="1212px" /> <g id="g15440" transform="translate(-17.575125,-56.402954)"> <rect y="424.12555" x="23.975124" height="121.01859" width="1200.5581" id="rect2530" style="fill:#fdffde;fill-opacity:1;stroke:none;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="61.202957" x="23.977724" height="121.01859" width="1200.5581" id="rect13672" style="fill:#e3fff9;fill-opacity:1;stroke:none;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="303.10794" x="23.977724" height="121.01859" width="1200.5581" id="rect12797" style="fill:#e4fff9;fill-opacity:1;stroke:none;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="182.14395" x="23.978024" height="121.01859" width="1200.5581" id="rect11922" style="fill:#fdffde;fill-opacity:1;stroke:none;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="545.14673" x="23.977674" height="121.01859" width="1200.5581" id="rect5439" style="fill:#e4fff9;fill-opacity:1;stroke:none;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8549" d="M 576.3913,259.87103 L 655.1936,350.64322" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8551" d="M 575.3938,219.97117 L 575.3938,122.21651 L 309.0623,217.97618" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path sodipodi:nodetypes="cc" id="path8553" d="M 574.3963,121.21901 L 843.4888,216.74703" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8555" d="M 223.9205,385.30263 L 146.1157,480.06481" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8557" d="M 161.6951,498.43972 L 83.8904,593.2019" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8559" d="M 155.9332,591.57177 L 155.9332,497.80709" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:nodetypes="cc" /> <path id="path8561" d="M 229.8372,394.35359 L 307.6419,489.11577" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <g id="g8563" transform="translate(422.2506,-51.0159)"> <g transform="translate(20.398141,179.49717)" id="g8565"> <path id="path8567" d="M -145.14541,373.35629 L -66.343182,467.12097" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <g transform="translate(-774.32493,120.28007)" id="g8569"> <path style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#ff0000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 677.35024,287.051 C 656.40281,307.00093 656.40281,307.00093 656.40281,307.00093" id="path8571" /> <path style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#ff0000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M 680.73133,292.91581 C 659.7839,312.86574 659.7839,312.86574 659.7839,312.86574" id="path8573" /> </g> </g> <path style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M -125.49834,552.84968 L -203.30306,647.61186" id="path8575" /> <path sodipodi:nodetypes="cc" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M -125.16348,645.62853 L -125.16348,551.86385" id="path8577" /> </g> <g id="g8579" transform="translate(409.9115,299.36089)"> <path style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M -109.35352,-45.137149 L -31.548799,49.625031" id="path8581" /> <path style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M -109.89623,-45.137109 L -187.70095,49.625071" id="path8583" /> </g> <path id="path8585" d="M 376.9592,391.22187 L 454.7639,485.98405" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8587" d="M 448.6615,594.2853 L 448.6615,500.52062" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:nodetypes="cc" /> <path id="path8589" d="M 575.3411,350.73121 L 575.3411,256.96653" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:nodetypes="cc" /> <path id="path8591" d="M 643.4202,390.69173 L 722.2224,481.46392" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8593" d="M 705.4487,594.96589 L 705.4487,501.20121" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:nodetypes="cc" /> <path id="path8595" d="M 640.265,504.09656 L 562.4602,598.85874" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8597" d="M 576.7438,389.82808 L 654.5485,484.59026" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8599" d="M 576.2011,389.82812 L 498.3964,484.5903" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8601" d="M 514.247,589.30903 L 514.247,495.54435" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:nodetypes="cc" /> <g id="g8603" transform="translate(681.5853,46.386205)"> <path style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M -42.000484,549.71104 L -42.000484,455.94636" id="path8605" sodipodi:nodetypes="cc" /> <g transform="translate(-710.32318,201.87284)" id="g8607"> <path id="path8609" d="M 677.35024,287.051 C 656.40281,307.00093 656.40281,307.00093 656.40281,307.00093" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#ff0000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8611" d="M 680.73133,292.91581 C 659.7839,312.86574 659.7839,312.86574 659.7839,312.86574" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#ff0000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> </g> </g> <path id="path8613" d="M 773.331,593.83451 L 773.331,500.06983" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:nodetypes="cc" /> <path id="path8615" d="M 773.331,482.96017 L 773.331,389.19549" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:nodetypes="cc" /> <path id="path8617" d="M 848.4372,260.85183 L 770.6325,355.61401" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <g id="g8619" transform="translate(893.7082,-192.7573)"> <path style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" d="M -42.000484,549.71104 L -42.000484,455.94636" id="path8621" sodipodi:nodetypes="cc" /> <g transform="translate(-710.32318,201.87284)" id="g8623"> <path id="path8625" d="M 677.35024,287.051 C 656.40281,307.00093 656.40281,307.00093 656.40281,307.00093" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#ff0000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8627" d="M 680.73133,292.91581 C 659.7839,312.86574 659.7839,312.86574 659.7839,312.86574" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#ff0000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> </g> </g> <path id="path8629" d="M 848.6891,390.75113 L 927.4913,484.51581" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path sodipodi:nodetypes="cc" id="path8631" d="M 851.7077,485.22291 L 851.7077,391.45823" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8633" d="M 848.4062,504.24176 L 927.2085,598.00644" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path sodipodi:nodetypes="cc" id="path8635" d="M 851.4249,598.71354 L 851.4249,504.94886" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <path id="path8637" d="M 921.7374,504.59531 L 1000.5396,598.35999" style="fill:none;fill-opacity:0.75;fill-rule:evenodd;stroke:#000000;stroke-width:3;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <rect y="581.99335" x="961.10736" height="52.105621" width="52.105568" id="rect6874" style="fill:#c8c8c8;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6876" y="621.05231" x="975.08246" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05231" x="975.08246" id="tspan6878" sodipodi:role="line">6</tspan></text> <rect y="581.99335" x="889.83099" height="52.105621" width="52.105568" id="rect6863" style="fill:#c8c8c8;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6865" y="621.05231" x="903.80609" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05231" x="903.80609" id="tspan6867" sodipodi:role="line">8</tspan></text> <rect y="581.99335" x="825.26862" height="52.105621" width="52.105568" id="rect6852" style="fill:#c8c8c8;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6854" y="621.05231" x="839.24384" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05231" x="839.24384" id="tspan6856" sodipodi:role="line">9</tspan></text> <rect y="581.99335" x="750.08246" height="52.105621" width="52.105568" id="rect6775" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6777" y="621.05231" x="764.05768" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05231" x="764.05768" id="tspan6779" sodipodi:role="line">5</tspan></text> <rect y="581.99335" x="679.39581" height="52.105621" width="52.105568" id="rect6764" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6766" y="621.05231" x="693.37103" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05231" x="693.37103" id="tspan6768" sodipodi:role="line">7</tspan></text> <rect y="581.99335" x="614.90765" height="52.105621" width="52.105568" id="rect6731" style="fill:#c8c8c8;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6733" y="621.05231" x="628.88287" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05231" x="628.88287" id="tspan6735" sodipodi:role="line">9</tspan></text> <rect y="581.99335" x="549.92242" height="52.105621" width="52.105568" id="rect6709" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6711" y="621.05231" x="563.89752" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05231" x="563.89752" id="tspan6713" sodipodi:role="line">6</tspan></text> <rect y="581.99335" x="489.32553" height="52.105621" width="52.105568" id="rect6698" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6700" y="621.05231" x="503.30075" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05231" x="503.30075" id="tspan6702" sodipodi:role="line">6</tspan></text> <rect y="581.99335" x="423.65353" height="52.105621" width="52.105568" id="rect6654" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6656" y="621.05237" x="437.62863" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05237" x="437.62863" id="tspan6658" sodipodi:role="line">3</tspan></text> <rect y="581.99335" x="341.11606" height="52.105621" width="52.105568" id="rect6610" style="fill:#c8c8c8;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6612" y="621.05237" x="355.09116" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05237" x="355.09116" id="tspan6614" sodipodi:role="line">2</tspan></text> <rect y="581.99335" x="272.10239" height="52.105621" width="52.105568" id="rect6599" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6601" y="621.05237" x="286.07761" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05237" x="286.07761" id="tspan6603" sodipodi:role="line">4</tspan></text> <rect y="581.99335" x="204.22017" height="52.105621" width="52.105568" id="rect6588" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6590" y="621.05237" x="218.19527" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05237" x="218.19527" id="tspan6592" sodipodi:role="line">7</tspan></text> <rect y="581.99341" x="131.67097" height="52.105621" width="52.105568" id="rect6577" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6579" y="621.05243" x="145.64619" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05243" x="145.64619" id="tspan6581" sodipodi:role="line">6</tspan></text> <rect y="581.99335" x="65.061478" height="52.105621" width="52.105568" id="rect6555" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6557" y="621.05237" x="79.036697" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="621.05237" x="79.036697" id="tspan6559" sodipodi:role="line">5</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,750.0897,224.15251)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6841" style="fill:#c8c8c8;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6843" y="500.73938" x="911.13641" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73938" x="911.13641" id="tspan6845" sodipodi:role="line">6</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,681.0761,224.15251)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6830" style="fill:#c8c8c8;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6832" y="500.73932" x="842.12286" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73932" x="842.12286" id="tspan6834" sodipodi:role="line">8</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,602.4458,224.15251)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6786" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6788" y="500.73938" x="763.49261" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73938" x="763.49261" id="tspan6790" sodipodi:role="line">5</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,533.4322,224.15251)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6753" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6755" y="500.73932" x="694.47894" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73932" x="694.47894" id="tspan6757" sodipodi:role="line">7</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,468.096,224.15251)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6720" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6722" y="500.73932" x="629.14276" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73932" x="629.14276" id="tspan6724" sodipodi:role="line">6</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,343.4481,224.15251)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6687" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6689" y="500.73938" x="504.49484" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73938" x="504.49484" id="tspan6691" sodipodi:role="line">6</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,277.7764,224.1525)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6643" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6645" y="500.73932" x="438.82321" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73932" x="438.82321" id="tspan6647" sodipodi:role="line">3</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,127.709,224.15251)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6632" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6634" y="500.73938" x="288.75571" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73938" x="288.75571" id="tspan6636" sodipodi:role="line">4</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,-16.414,224.15201)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6566" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6568" y="500.73883" x="144.63277" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="500.73883" x="144.63277" id="tspan6570" sodipodi:role="line">5</tspan></text> <rect y="349.55655" x="826.01788" height="52.105621" width="52.105568" id="rect6819" style="fill:#c8c8c8;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6821" y="388.61554" x="839.9931" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="388.61554" x="839.9931" id="tspan6823" sodipodi:role="line">8</tspan></text> <rect y="349.55655" x="750.08246" height="52.105621" width="52.105568" id="rect6797" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6799" y="388.61554" x="764.05768" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="388.61554" x="764.05768" id="tspan6801" sodipodi:role="line">5</tspan></text> <rect y="349.55655" x="627.90021" height="52.105621" width="52.105568" id="rect6742" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6744" y="388.61554" x="641.87531" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="388.61554" x="641.87531" id="tspan6746" sodipodi:role="line">7</tspan></text> <rect y="349.55655" x="550.90021" height="52.105621" width="52.105568" id="rect6676" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6678" y="388.61554" x="564.87531" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="388.61554" x="564.87531" id="tspan6680" sodipodi:role="line">6</tspan></text> <rect y="349.55655" x="350.58652" height="52.105621" width="52.105568" id="rect6621" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6623" y="388.61557" x="364.56161" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="388.61557" x="364.56161" id="tspan6625" sodipodi:role="line">3</tspan></text> <rect y="349.55655" x="196.5865" height="52.105621" width="52.105568" id="rect6508" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text6510" y="388.61557" x="210.5616" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="388.61557" x="210.5616" id="tspan6512" sodipodi:role="line">5</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,681.2051,-23.31427)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6808" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6810" y="253.27255" x="842.25177" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="253.27255" x="842.25177" id="tspan6812" sodipodi:role="line">5</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,405.0227,-23.31427)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path6665" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text6667" y="253.27255" x="566.06952" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="253.27255" x="566.06952" id="tspan6669" sodipodi:role="line">6</tspan></text> <path transform="matrix(0.984856,0,0,0.984856,127.709,-23.31427)" d="M 198.90492 268.02789 A 25.392117 25.392117 0 1 1 148.12069,268.02789 A 25.392117 25.392117 0 1 1 198.90492 268.02789 z" sodipodi:ry="25.392117" sodipodi:rx="25.392117" sodipodi:cy="268.02789" sodipodi:cx="173.5128" id="path8732" style="fill:#ffffff;fill-opacity:1;fill-rule:evenodd;stroke:#000000;stroke-width:3.04613066;stroke-linecap:butt;stroke-linejoin:miter;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" sodipodi:type="arc" /> <text id="text8734" y="253.27255" x="288.75571" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="253.27255" x="288.75571" id="tspan8736" sodipodi:role="line">3</tspan></text> <rect y="77.556564" x="550.90021" height="52.105621" width="52.105568" id="rect8738" style="fill:#ffffff;fill-opacity:1;stroke:#000000;stroke-width:3;stroke-miterlimit:4;stroke-dasharray:none;stroke-opacity:1" /> <text id="text8740" y="116.61557" x="564.87531" style="font-size:36.6294136px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="116.61557" x="564.87531" id="tspan8742" sodipodi:role="line">6</tspan></text> <text id="text15420" y="378.97879" x="1151.3359" style="font-size:27.75828552px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="378.97879" x="1151.3359" id="tspan15422" sodipodi:role="line">MAX</tspan></text> <text id="text15432" y="253.88142" x="1151.3359" style="font-size:27.75828552px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="253.88142" x="1151.3359" id="tspan15434" sodipodi:role="line">MIN</tspan></text> <text id="text15436" y="129.20255" x="1151.3359" style="font-size:27.75828552px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="129.20255" x="1151.3359" id="tspan15438" sodipodi:role="line">MAX</tspan></text> <text id="text2221" y="498.00885" x="1151.3362" style="font-size:27.75828552px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="498.00885" x="1151.3362" id="tspan2223" sodipodi:role="line">MIN</tspan></text> <text id="text2225" y="620.87744" x="1151.3362" style="font-size:27.75828552px;font-style:normal;font-weight:normal;fill:#000000;fill-opacity:1;stroke:none;stroke-width:1px;stroke-linecap:butt;stroke-linejoin:miter;stroke-opacity:1;font-family:Bitstream Vera Sans" xml:space="preserve"><tspan y="620.87744" x="1151.3362" id="tspan2227" sodipodi:role="line">MAX</tspan></text> </g> </svg> --- class: small # Alpha-beta pruning * For the max player: Remember the minimum score they will reach in nodes that were already evaluated (alpha) * For the min player: Remember the maximum score they will reach in nodes that were already evaluated (beta) * If beta is less than alpha, stop evaluating the subtree * Example: If the max player can reach 5 points by choosing the left subtree, and the min player finds an action in the right subtree that results in 4 points, they can stop searching. * If the right subtree was reached, the min player could choose the action that results in 4 points, therefore the max player will never choose the right subtree, because they can get 5 points in the left one --- class: small # Minimax: Limitations * The tree for our mini game was quite large * Imagine one for chess * Even with Alpha-Beta pruning it's impossible to evaluate all nodes * Use a guess! For example: Board value after 3 turns * What about unknown information (like a deck that is shuffled)? --- class: center, middle # Presenting and Discussing Research Papers --- class: small # The Structure of a Paper * Introduction: What is the problem and why is it relevant? * Related Work: What have other people done that is related to the work discussed, and why do their approaches not solve the problem at hand? * Approach/Methodology: How do the authors solve the problem? * Result: How can we be sure that the proposed approach actually solves the problem? * Conclusion: What are the limitations of the proposed work, and how could it be expanded upon in the future? --- class: small # Reading a Paper * When reading a paper, determine the answers to the questions that should be answered in each section * While the technical details can be interesting, your main focus should be on understanding the problem and the idea behind the solution * Also challenge any assumptions the authors may have made to determine if they have actually solved the problem * However, also note the good ideas in the paper * Never just assume that a problem is "not important" --- class: small # The Context of a Paper * When was the paper written? What were the available computational resources at the time? * Who wrote the paper? What is their expertise? * Where was the paper published? - Workshop: Typically experimental work, work in progress, or work without strong results - Academic Conference: In (most of) Computer Science conferences are the norm for strong, peer-reviewed publications. But not all conferences are created equal: Know your conferences! - Industry Conference: Typically shows more applied work with less focus on scientific rigor and provable results - Journals: Typically reserved for more detailed work, or extensions of conference papers - Magazines: Usually not peer-reviewed, but articles are selected by an editor --- class: small # Some Academic Conferences * Strong General AI-focused conferences: * [Association for the Advancement of AI conference (AAAI)](https://aaai.org/Conferences/AAAI/aaai.php) * [ International Joint Conference on Artificial Intelligence (IJCAI)](https://www.ijcai.org/) * Strong Game AI-focused conferences: * [Artificial Intelligence in Interactive Digital Entertainment (AIIDE)](http://aiide.org) * [Computational Intelligence in Games (CIG)](http://www.ieee-cig.org/) * Strong Games-focused conferences: * [Conference on Games (CoG)](http://www.ieee-cog.org/) * [Foundations of Digital Games (FDG)](http://www.foundationsofdigitalgames.org/) * [Computer-Human Interaction Play (CHI-Play)](https://chiplay.acm.org) * [Digital Games Research Association Conference (DiGRA)](http://www.digra.org/) --- class: small # Other Publication Venues * Some popular academic workshops for game AI research: * [Experimental AI in Games (EXAG)](http://exag.org) * [Procedural Content Generation Workshop (PCG)](https://www.pcgworkshop.com/) * [Intelligent Narrative Technologies (INT)](https://sites.google.com/ncsu.edu/intwiced18/home) * Industry-focused publication venues * [Game Developers Conference (GDC)](https://www.gdconf.com/) (Happening **right now**) * Related: [GDC Vault](https://www.gdcvault.com/) * [Gamasutra (website)](http://www.gamasutra.com/) * [Game AI Pro (book series)](http://www.gameaipro.com/) * Universities * [Institutions active in Technical Games Research](http://www.kmjn.org/game-rankings/) --- class: small # Presenting the Papers * First step: Read and understand the paper * Make sure your presentation includes the important parts: - Which problem is being solved? - How do the authors solve it - What evidence do they provide (experiment results)? - What's next? * Don't get bogged down in too much detail * Avoid formulas on slides, unless they are central to the paper * After your presentation you should also *lead* the discussion --- # Paper Discussions * What did we like about the paper? * Which application can we see for the technique? * Are there any assumptions the authors made that may need a second look? * Are there any problems with the experiment? * What are the limitations of the approach? * How can the work be expanded upon? --- # For Next Week * Read [Human Enemy AI in The Last of US](http://www.gameaipro.com/GameAIPro2/GameAIPro2_Chapter34_Human_Enemy_AI_in_The_Last_of_Us.pdf) * There is also a [GDC talk](https://www.gdcvault.com/play/1020338/The-Last-of-Us-Human) * We will discuss this paper next week! --- class: small # References * [Finite State Machines for Game AI (ES)](https://gamedevelopment.tutsplus.com/es/tutorials/finite-state-machines-theory-and-implementation--gamedev-11867) * [Halo 2 AI using Behavior Trees](http://www.gamasutra.com/view/feature/130663/gdc_2005_proceeding_handling_.php) * [A* implementations in multiple languages](https://rosettacode.org/wiki/A*_search_algorithm) * [Sliding puzzle using A*](https://blog.goodaudience.com/solving-8-puzzle-using-a-algorithm-7b509c331288) * [Potential Fields for RTS Games](http://bth.diva-portal.org/smash/get/diva2:835046/FULLTEXT01.pdf) * [Building a Chess AI with Minimax](https://medium.freecodecamp.org/simple-chess-ai-step-by-step-1d55a9266977) * [The Art of Dissecting Journal Articles](https://phdlife.warwick.ac.uk/2015/03/09/the-art-of-dissecting-journal-articles/) * [How to Read a Paper](https://web.stanford.edu/class/ee384m/Handouts/HowtoReadPaper.pdf)